Shipping a user research platform with Cursor + Supabase | Li Xia

From no coding experience to technical founder in 2.5 years

Hey, I’m Colin! I help PMs and business leaders improve their technical skills through real-world case studies. Subscribe to paid to get access to the AI Product Circle community on Slack, my full Tech Foundations for PMs course (84 lessons), a prompt library for common PM tasks, and discounts for live cohort-based courses.

Li Xia, a product manager turned founder, has developed one of the most systematic approaches to AI-assisted development I’ve seen. Despite starting his coding journey just 2.5 years ago, he’s built and shipped Sondar.ai, an AI-native UX research platform that helps product teams conduct user testing and synthesize insights in minutes rather than hours.

His secret isn’t just using AI tools. It’s a repeatable process that turns product requirements into working code through a series of structured steps. Let’s dive into how he does it.

The Product

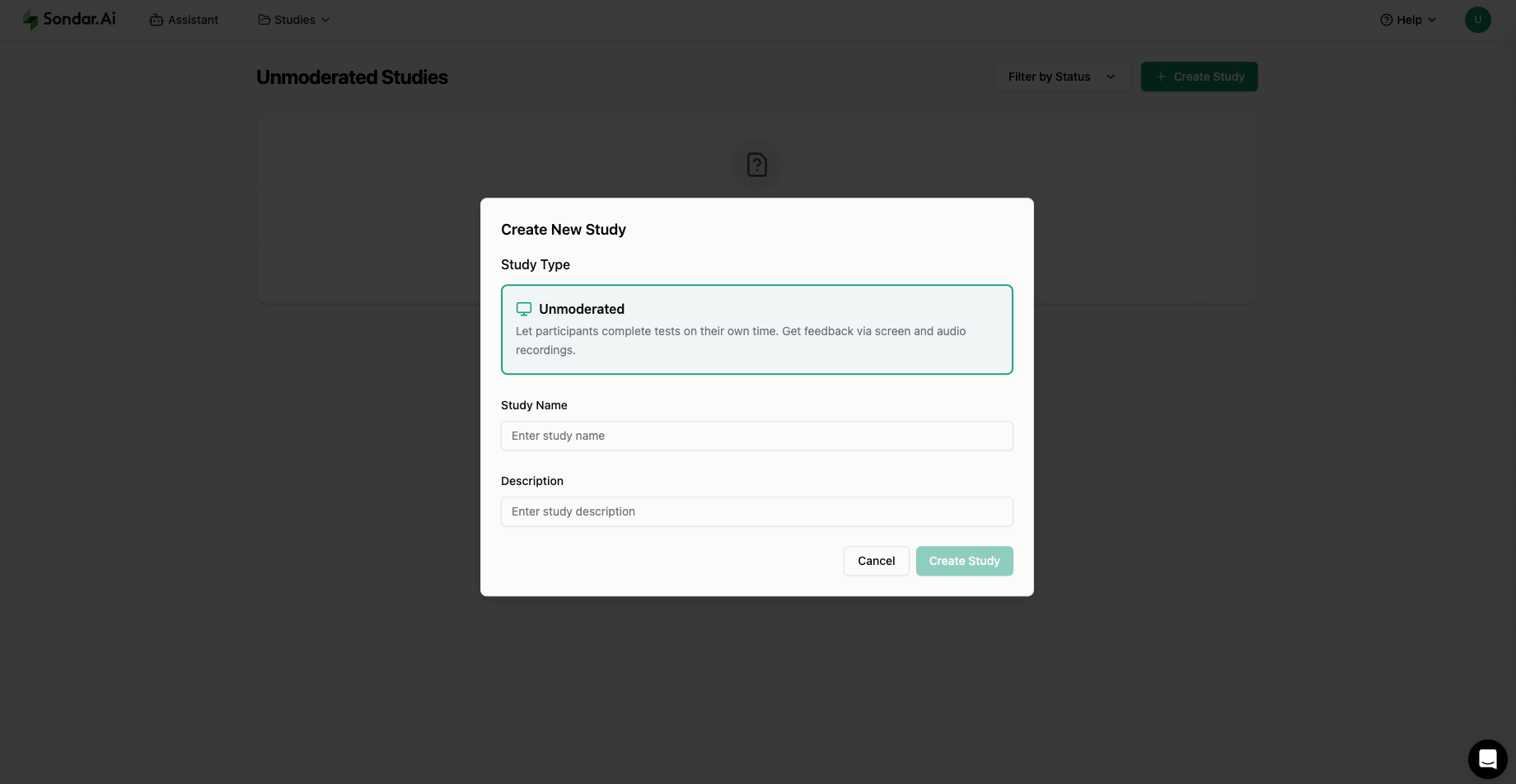

Sondar.ai addresses a problem Li knew intimately from his eight years in product management: teams struggle with customer discovery. His platform covers the entire research workflow, from planning studies and recruiting participants (accessing 200,000+ participants across 33 countries) to conducting research and using AI to synthesize insights from video recordings.

The platform can test everything from Figma prototypes to live websites, automatically transcribing sessions, extracting key insights, and organizing feedback into actionable categories. What used to take hours of manual analysis now happens in minutes.

The Process

Li’s approach to shipping features follows a methodical five-step process that maximizes AI effectiveness while maintaining quality control.

Step 1: PRD Generation with ChatPRD

Li starts every feature with a comprehensive Product Requirements Document, but he doesn’t write it from scratch. He uses ChatPRD, a tool by Claire Vo, which interviews him about the feature and generates a detailed PRD including business goals, user stories, functional requirements, and user experience flows.

“The PRD step is crucially important because a lot of that detail flows into the design process that follows,” Li explains. This document becomes the foundation for every subsequent step.

Step 2: Visual Design with UX Pilot

Rather than jumping straight to code, Li uses UX Pilot to transform PRD requirements into visual interfaces. He provides the AI with context from the PRD, screenshots of existing UI, and specific instructions about the feature.

The key insight: AI quality dramatically improves with context. Li includes not just feature descriptions but also design principles and system guidelines. “By combining what you are actually trying to build with the principles of your design system, you get much better quality output.”

Step 3: Engineering Task Breakdown

Here’s where Li’s process gets particularly sophisticated. He imports the PRD into Cursor and uses a custom prompt system to generate detailed engineering tasks. His “generate task file” contains specific instructions for breaking down features into parent tasks with detailed subtasks.

The AI creates a comprehensive task list that includes database changes, frontend modifications, and testing requirements. Li reviews this carefully because “once we get into the build process, this is really the blueprint it’s using to turn these prompts into actual code.”
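To make the "parent tasks with detailed subtasks" idea concrete, here is a minimal TypeScript sketch of what such a task list could look like as data, plus a helper that renders it as a checklist for review. Every name here (`ParentTask`, `Subtask`, `renderTaskList`, the example feature) is hypothetical and chosen for illustration; the interview does not show the actual format of Li’s task files.

```typescript
// Hypothetical shape for a generated task list. These names are
// illustrative assumptions, not taken from Li's actual prompt files.
type TaskArea = "database" | "frontend" | "testing";

interface Subtask {
  id: string; // e.g. "1.2"
  description: string;
  done: boolean;
}

interface ParentTask {
  id: string; // e.g. "1"
  title: string;
  area: TaskArea;
  subtasks: Subtask[];
}

// Render the task list as a plain-text checklist so a human can review
// the blueprint before any code is written. A parent task is checked
// only when it has subtasks and all of them are done.
function renderTaskList(tasks: ParentTask[]): string {
  const lines: string[] = [];
  for (const task of tasks) {
    const allDone =
      task.subtasks.length > 0 && task.subtasks.every((s) => s.done);
    lines.push(`- [${allDone ? "x" : " "}] ${task.id} ${task.title} (${task.area})`);
    for (const sub of task.subtasks) {
      lines.push(`  - [${sub.done ? "x" : " "}] ${sub.id} ${sub.description}`);
    }
  }
  return lines.join("\n");
}

// Made-up example feature, for illustration only.
const exampleTasks: ParentTask[] = [
  {
    id: "1",
    title: "Add export table",
    area: "database",
    subtasks: [
      { id: "1.1", description: "Write migration", done: true },
      { id: "1.2", description: "Add access policy", done: false },
    ],
  },
];

console.log(renderTaskList(exampleTasks));
```

Keeping the list in a structured, reviewable form is the point: the review Li describes happens on this blueprint, before the build starts.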

Step 4: Systematic Implementation

Li uses another custom prompt file called “Action Tasks” that instructs the AI to work through the task list one item at a time, asking for review before moving to the next task. This prevents the AI from attempting to build everything at once and failing.

“By having these clear steps, it really helps with that consistency and creates alignment between you and the AI,” he notes. When bugs appear, he can easily restore to a previous checkpoint and retry with different instructions.
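The control flow described here (one task, then a review, then either move on or restore and retry) can be sketched as a small loop. This is a simplified model under stated assumptions, not how Cursor or Li’s “Action Tasks” prompt works internally: `runOneAtATime`, `applyStep`, and `review` are hypothetical names, the "checkpoint" is just the previous immutable state, and the retry limit is an invented parameter.

```typescript
type ReviewDecision = "approve" | "retry";

interface Step {
  id: string;
  description: string;
}

// Hypothetical driver: apply one step, ask for review, and only then move on.
// A rejected attempt is discarded by starting again from the checkpoint.
function runOneAtATime<S>(
  steps: Step[],
  initialState: S,
  applyStep: (state: S, step: Step, attempt: number) => S,
  review: (state: S, step: Step, attempt: number) => ReviewDecision,
  maxAttempts = 3, // assumed limit before handing control back to the human
): { state: S; completed: string[]; retries: number } {
  let state = initialState;
  const completed: string[] = [];
  let retries = 0;

  for (const step of steps) {
    const checkpoint = state; // state is treated as immutable, so this is a safe snapshot
    let approved = false;

    for (let attempt = 1; attempt <= maxAttempts && !approved; attempt++) {
      const candidate = applyStep(checkpoint, step, attempt);
      if (review(candidate, step, attempt) === "approve") {
        state = candidate; // accept the change
        approved = true;
      } else {
        retries++; // discard the candidate; the next attempt restarts from the checkpoint
      }
    }

    if (!approved) {
      break; // stop here rather than building later steps on a rejected one
    }
    completed.push(step.id);
  }

  return { state, completed, retries };
}
```

In this model a failed step never contaminates later ones, which mirrors the reason given above for not letting the AI build everything at once.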

Step 5: Iterative Testing and Deployment

Li’s tech stack (Supabase for the backend, Vue 3 for the frontend, and Vercel for deployment) supports rapid iteration. He runs everything locally first, then pushes to staging and production environments as needed.

What Makes This Work

Li’s success comes from treating AI as a team member rather than a magic wand. He’s developed several key practices:

Explicit Instructions: “Sometimes the AI’s quite finicky. Being explicit definitely helps.” Li includes detailed system prompts and repeats critical requirements even when they’re already in his stored prompts.

Reusable Prompt Templates: Li maintains a library of prompt files for different tasks: PRD generation, task breakdown, implementation guidelines. “It’s just a really scalable way to reuse those prompts again and again for every feature that I build.”

One Task at a Time: Rather than asking AI to build entire features, Li breaks everything into discrete, reviewable steps. This makes debugging much easier and prevents cascade failures.

Context Management: Every conversation includes the relevant PRD, task lists, and system prompts. Li ensures the AI always has the full context needed to make good decisions.
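The practices above (stored templates, full context in every conversation, and explicitly repeated requirements) can be combined in one small helper. This is a rough sketch, assuming a `{{placeholder}}` syntax and template names (`generateTasks`, `actionTasks`) that are invented here; Li’s real prompt files and their wording are not shown in the interview.

```typescript
// Hypothetical prompt library. Template names, wording, and the
// {{placeholder}} syntax are assumptions made for this sketch.
const promptTemplates: Record<string, string> = {
  generateTasks:
    "Break the PRD below into parent tasks with subtasks.\n\nPRD:\n{{prd}}",
  actionTasks:
    "Work through the task list one subtask at a time. " +
    "Stop and ask for review after each.\n\nPRD:\n{{prd}}\n\nTasks:\n{{tasks}}",
};

// Fill every {{key}} in the template. Missing context is an error, so a
// prompt is never sent without the PRD or task list it depends on.
function fillTemplate(template: string, values: Record<string, string>): string {
  return template.replace(/\{\{(\w+)\}\}/g, (_match, key: string) => {
    const value = values[key];
    if (value === undefined) {
      throw new Error(`Missing context for placeholder: ${key}`);
    }
    return value;
  });
}

// Build a prompt from a stored template, then repeat any critical
// requirements explicitly at the end, even if the template covers them.
function buildPrompt(
  name: string,
  context: Record<string, string>,
  explicitReminders: string[] = [],
): string {
  const template = promptTemplates[name];
  if (template === undefined) {
    throw new Error(`Unknown template: ${name}`);
  }
  const body = fillTemplate(template, context);
  if (explicitReminders.length === 0) {
    return body;
  }
  const reminders = explicitReminders.map((r) => `- ${r}`).join("\n");
  return `${body}\n\nReminders:\n${reminders}`;
}
```

For example, `buildPrompt("generateTasks", { prd: prdText })` reuses the same stored instructions for every feature, while a call that forgets the PRD fails immediately instead of producing a context-free prompt.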

What He’d Do Differently

Li’s process has evolved through trial and error. He’s learned that larger features benefit from this systematic approach, while smaller features can be built more casually. He’s also discovered that different AI models excel at different tasks, and he has recently started using Gemini alongside Claude for certain coding tasks.

The most important lesson: investing time upfront in planning and task breakdown prevents much larger debugging efforts later. “Without these detailed steps, the agent will go off in a certain direction, do something, and maybe the output isn’t what you’re expecting.”

Li’s journey from UX designer to technical founder took just 2.5 years, starting with no-code tools like Bubble before transitioning to actual programming. His advice for other product people: Find a project you really want to build and take it one step at a time.

He built productivity tools for his own workflow first, learning database structure and API interactions through Bubble before moving to more complex development. The key was learning incrementally while building real projects rather than following abstract tutorials.

The Results

Li has been shipping features into production for the past 18 months using this process. The systematic approach lets him move from idea to working feature rapidly while maintaining code quality. Most importantly, the process scales: each new feature benefits from the accumulated prompt templates and workflows.

For product managers interested in trying Sondar.ai for their own user research, it’s live at sondar.ai. And for those inspired to build their own AI-assisted development process, Li’s approach shows that success comes not from AI magic, but from treating these tools as powerful team members that need clear instructions and systematic workflows.