How to build an AI-enabled product

Learn practical approaches to incorporate AI in your product

Hey, I’m Colin! I help PMs and business leaders improve their technical skills through real-world case studies. For more, check out my Live cohort course and subscribe on Substack.

Generative AI has taken over the internet. If you’re a PM who hasn’t worked on AI features, it can feel like you’re getting left behind.

In this article, we'll review generative AI capabilities and how Gen AI is impacting companies today. Then we’ll build our own AI-powered product from the ground up. Let’s get started!

What is AI?

Generative AI Models

Today, most references to AI are referring to generative AI. This is a subset of machine learning techniques focused on the machine generation of text or media.

Gen AI models are expensive to train, so the vast majority of companies use third-party models rather than build their own. Providers include companies like OpenAI, Anthropic, Meta, and more.

There are various types of generative models and features. These include:

General Text - non-structured text input and output (GPT-4, Claude 3.5, Llama 3.2)

Images - text input to image output (DALL-E, Flux, Midjourney)

Structured Data - structured text output like JSON or code

Multi-modal - input can be images or text

Effectively incorporating Gen AI models into your product can have an outsized impact. One of the best examples is StackBlitz, which launched a new product and reached $4M ARR in 4 weeks by using Claude. They used zero-shot code generation, meaning there were no complex RAG pipelines or multi-agent workflows powering their product. With an existing platform, a few API integrations, and a well-thought-out pattern for structuring code and taking specific actions, StackBlitz launched an industry-defining product.

Let’s dive into how we can do the same.

Using Gen AI

Using generative AI models is much simpler than it seems. Typically, these models are hosted by the company that created them (OpenAI, Anthropic, etc.). From your product, you send an API request containing some instructions and the user-provided content. The instructions provide the structure, and the user's content tells the model what to create.

A typical workflow is to pass the request from your client to your server, then on to the Gen AI provider. Routing requests through your server keeps your provider API key out of client-side code, where anyone using your product could read it.
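To make the request structure concrete, here is a minimal sketch of how a server might combine its own instructions with the user-provided content before calling a provider. The field names (`model`, `messages`, `role`, `content`) follow a shape that many chat-style APIs resemble, but they are assumptions here, as are `SYSTEM_INSTRUCTIONS`, `build_provider_request`, and the placeholder model name. Check your provider's documentation for the exact format.

```python
import json

# Hypothetical instructions owned by your product; users never edit these.
SYSTEM_INSTRUCTIONS = "You are a product assistant. Reply in markdown."


def build_provider_request(user_content: str, model: str = "example-model") -> dict:
    """Combine fixed instructions with user-provided content into one request body."""
    cleaned = user_content.strip()
    if not cleaned:
        raise ValueError("user_content must not be empty")
    return {
        "model": model,  # placeholder, not a real model ID
        "messages": [
            # The instructions provide the structure...
            {"role": "system", "content": SYSTEM_INSTRUCTIONS},
            # ...and the user's content says what to create.
            {"role": "user", "content": cleaned},
        ],
    }


# Example: the body your server would send on to the provider.
body = build_provider_request("Summarize our onboarding flow in three bullets.")
print(json.dumps(body, indent=2))
```

Your server would attach its API key when sending this body to the provider, so the key never has to appear in the client.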

Creating high-quality prompts is both an art and a science. There are common patterns for prompting, but producing better results is often a process of trial and error.

Customizing GenAI Models

Many products can be enhanced with generative AI without much customization. Well-crafted prompts that fit into an existing product or workflow can automate much of the typical work needed.

When models do need to be customized, there are a few common approaches:

N-shot prompting

Fine-tuning

Retrieval Augmented Generation

N-shot prompting

N-shot prompting refers to adding one or more examples to the prompt. Providing clear examples of inputs and outputs guides the model to create content that more closely matches the expected outputs. One-shot means adding a single example; with N-shot, you add several.
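As a rough illustration, the sketch below turns a list of example input/output pairs into a chat-style message list, with the real request placed last. The function name `build_n_shot_messages` and the `role`/`content` message shape are assumptions for illustration, not any specific provider's API.

```python
def build_n_shot_messages(instructions, examples, user_input):
    """Build a message list with N worked examples ahead of the real request.

    `examples` is a list of (example_input, example_output) pairs.
    One pair gives a one-shot prompt; several pairs give an N-shot prompt.
    """
    messages = [{"role": "system", "content": instructions}]
    for example_input, example_output in examples:
        # Each example is shown as a past exchange the model can imitate.
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    # The actual request always comes last.
    messages.append({"role": "user", "content": user_input})
    return messages


examples = [
    ("Feature: dark mode", "As a user, I want dark mode so that I can reduce eye strain."),
    ("Feature: CSV export", "As a user, I want CSV export so that I can analyze data offline."),
]
messages = build_n_shot_messages("Write one user story per feature.", examples, "Feature: SSO login")
print(len(messages))  # 1 system + 2 per example + 1 request
```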

Fine-tuning

Not all Gen AI models support fine-tuning. If the model does, you can train it on many more examples than can fit in a prompt. This requires more upfront work but can result in a model that performs better for your use case.
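Most of the upfront work in fine-tuning is preparing the training examples. The sketch below writes example pairs as JSON Lines (one JSON object per line), a common interchange format for datasets like this. The `input` and `output` field names and the `to_jsonl` helper are hypothetical; each provider that supports fine-tuning defines its own required schema.

```python
import json


def to_jsonl(examples):
    """Serialize (input, output) pairs as JSON Lines, one training example per line."""
    lines = []
    for example_input, example_output in examples:
        # Hypothetical field names; use the schema your provider documents.
        record = {"input": example_input, "output": example_output}
        lines.append(json.dumps(record))
    return "\n".join(lines)


training_examples = [
    ("Feature: dark mode", "As a user, I want dark mode so that I can reduce eye strain."),
    ("Feature: CSV export", "As a user, I want CSV export so that I can analyze data offline."),
]
print(to_jsonl(training_examples))
```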

Retrieval Augmented Generation (RAG)

Retrieval Augmented Generation takes a different approach from prompting and fine-tuning. With RAG, you create a database of content, and the most relevant pieces are retrieved and passed to the model when it creates a response. This allows you to point to specific documents or context as the source of a response generated by an LLM. RAG is more complex to set up and maintain, but provides a method of leveraging a vast amount of external data on the fly.
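The retrieve-then-generate flow can be sketched in a few lines. This toy version ranks documents by how many words they share with the question, then places the best match in the prompt. Production RAG systems typically rank by similarity between vector embeddings instead of word overlap, and the function names here (`tokenize`, `retrieve`, `build_rag_prompt`) are illustrative only.

```python
import re


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list, top_k: int = 1) -> list:
    """Return the top_k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_rag_prompt(query: str, documents: list, top_k: int = 1) -> str:
    """Put the retrieved context ahead of the question in a single prompt."""
    context = "\n\n".join(retrieve(query, documents, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
]
print(build_rag_prompt("How long do refunds take?", docs))
```

Because the retrieved text is included in the prompt, you can also show it to the user as the source of the answer.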

Here’s a deeper dive into RAG if you’re interested: https://blogs.nvidia.com/blog/what-is-retrieval-augmented-generation/

Example: Build Your Own AI-enabled PRD Writer

Requirements

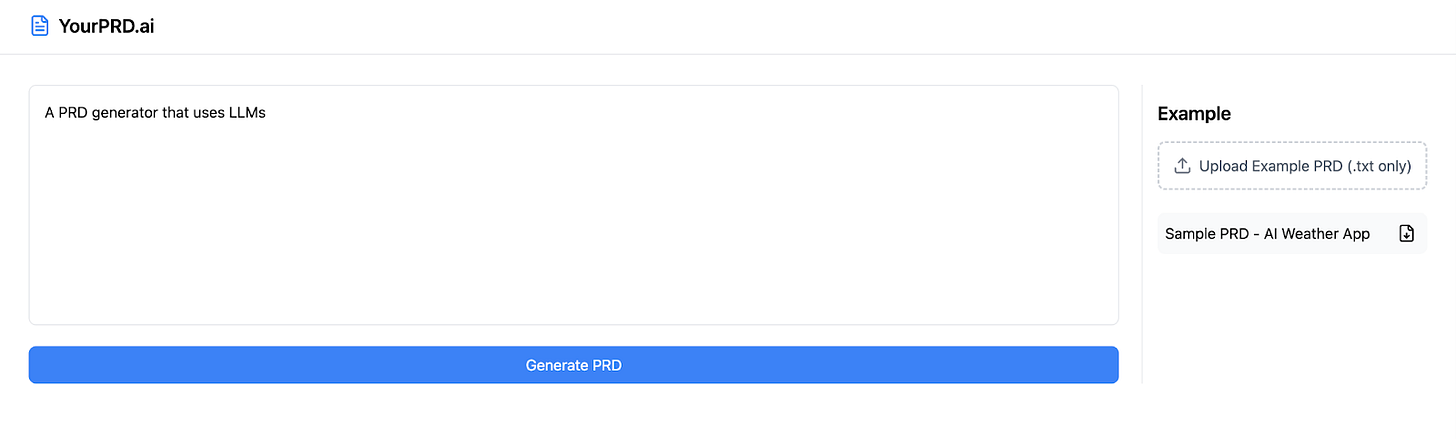

Let’s build our own AI-enabled product: a one-shot generator for product requirements documents (PRDs). It will take a single uploaded PRD as an example (our one-shot) along with the user’s input. It will add both to the API request to the GenAI provider and expect a PRD in response that matches the user’s request but is formatted like our example.

Our requirements will be:

Users can upload a single example PRD as .txt (easier to handle than PDF or DOC)

Users can enter a prompt for a requested PRD generation

The system will structure the request as a POST request to our server with the content

Our server will integrate with the GenAI provider to generate the new PRD in markdown

The new PRD will be displayed in an editor

(Nice-to-have) - the main PRD sections will be summarized in a right panel
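The server-side part of these requirements could look roughly like the sketch below: validate the upload, then combine the example PRD and the user's prompt into one request. This is not the Activepieces flow itself, just plain Python under stated assumptions; `INSTRUCTIONS`, `build_prd_request`, and `list_section_headings` are hypothetical names. For the nice-to-have, it takes the simplest possible reading and lists the markdown headings of the generated PRD rather than summarizing each section.

```python
# Hypothetical instructions for the one-shot PRD generator.
INSTRUCTIONS = (
    "You write product requirements documents (PRDs). "
    "Match the structure and tone of the example PRD. "
    "Respond in markdown."
)


def build_prd_request(example_filename: str, example_prd: str, user_prompt: str) -> dict:
    """Validate the upload and combine the example PRD with the user's prompt."""
    if not example_filename.lower().endswith(".txt"):
        raise ValueError("The example PRD must be uploaded as a .txt file")
    if not example_prd.strip():
        raise ValueError("The example PRD is empty")
    if not user_prompt.strip():
        raise ValueError("The prompt is empty")
    return {
        "instructions": INSTRUCTIONS,
        "user_message": (
            "Example PRD:\n" + example_prd.strip()
            + "\n\nWrite a new PRD for this request:\n" + user_prompt.strip()
        ),
    }


def list_section_headings(markdown: str) -> list:
    """Collect markdown headings, e.g. to populate a side panel."""
    return [
        line.lstrip("#").strip()
        for line in markdown.splitlines()
        if line.startswith("#")
    ]


request = build_prd_request("example.txt", "# Overview\nWhy we are building this.", "A PRD for dark mode")
print(request["user_message"])
```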

Design

Here’s a simple mockup of our product I made using Excalidraw. You can use whatever products you’re most comfortable with for this step.

Technical Implementation

Our product requires both a client and a server. We’ll create the client using Bolt.new, and run our server on Activepieces. Activepieces already has pre-built integrations with most AI providers, so we can easily set that up.

Below you’ll find a few prompts I used throughout the process of building in Bolt:

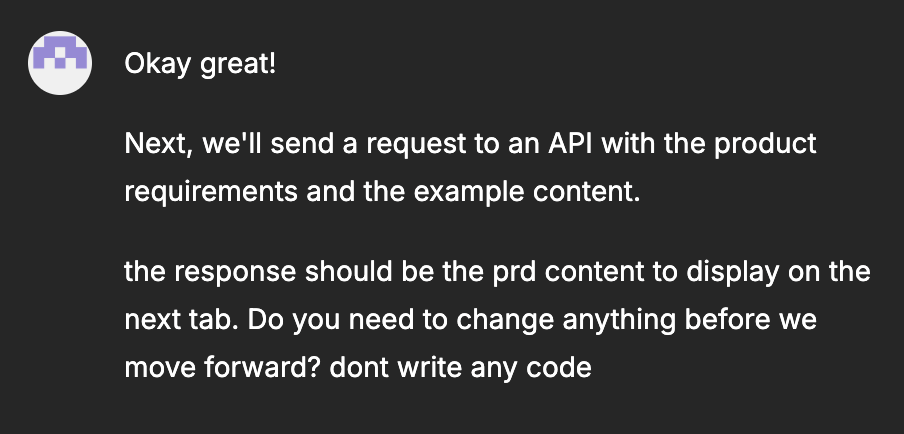

And my workflow in Activepieces:

MVP

In about 20 minutes, I was able to create an MVP of our PRD generator using Bolt.new. You can give it a try here.

Build your own

Want to build your own AI-enabled app? Sign up for my free 5-day email course to build an AI-enabled todo app using Bolt in less than a week!

Putting it all together

AI features can transform your product, but only if implemented thoughtfully. StackBlitz proved this by reaching $4M ARR in just 4 weeks with a single AI integration. The key? They kept it simple - using existing models, crafting good prompts, and enhancing their core product value rather than trying to reinvent it.

Start small, focus on user value, and iterate based on feedback. That's how you turn the AI hype into real business results.

@colin, thanks for writing this great article. I have been trying to properly set up the flow in Activepieces, but I fail to do so. Will you write an article to guide how to set up the flow in Activepieces to call the LLM provider APIs?

On the Bolt side, my understanding is that once you define the flow with a Catch Webhook trigger, you add the URL generated by Activepieces to the code. Is that correct? And then Bolt knows where to add it in the code.

A few things I struggled with, if that helps:

- How do I tell the webhook to get the user input from the client side? I didn't see any field for it.

- Should the webhook be integrated?

- In the action part, what should I write in the JSON text box? Also, how should I differentiate between the system prompt and the user prompt? How should I tell the Action that it should get the user input from the Catch Webhook?