How do Replit, v0, and Bolt actually work?

Deep dive into AI coding

Hey, I’m Colin! I help PMs and business leaders improve their technical skills through real-world case studies. For more, check out my live courses, Technical Foundations and AI Prototyping.

Coding has quickly become the most valuable use case for AI, scaling companies to billion-dollar valuations and pushing major brands like Canva and Figma to ship competitive products or risk losing their dominance.

In today’s post, we’ll do a deep dive into how AI coding tools work under the hood. We’ll explore different architectures for AI applications and how these popular tools might work.

Let’s dive in.

Types of AI Apps

Before diving into specific tools, it's important to understand that there are multiple approaches to building AI-powered applications. Three common patterns have emerged:

Simple Integration

Single Agent

Multi-Agent

Simple Integration

The simplest approach is a direct integration with an AI model. This is what you'd build by following my guide to building an AI feature: a client that sends requests to a server, which forwards them to an AI provider like OpenAI or Anthropic.
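To make that flow concrete, here is a minimal sketch of the server-side piece. It assumes a chat-style API that takes a list of role-tagged messages (the shape OpenAI's Chat Completions API uses; Anthropic's Messages API passes the system prompt as a separate field instead). `call_provider` and `fake_provider` are hypothetical stand-ins for a real SDK call, so the sketch runs without an API key.

```python
from typing import Callable

# Hypothetical system prompt; a real app would tune this carefully.
SYSTEM_PROMPT = "You are a helpful writing assistant."

Message = dict[str, str]


def build_messages(user_input: str, system_prompt: str = SYSTEM_PROMPT) -> list[Message]:
    """Prepend the system prompt to the user's request, chat-API style."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_input},
    ]


def handle_request(user_input: str, call_provider: Callable[[list[Message]], str]) -> str:
    """Server handler: the client never talks to the AI provider directly,
    so the API key and the system prompt stay on the server."""
    messages = build_messages(user_input)
    return call_provider(messages)


def fake_provider(messages: list[Message]) -> str:
    """Hypothetical stand-in for an SDK call to OpenAI or Anthropic."""
    return f"(model reply to: {messages[-1]['content']})"


print(handle_request("Write a tagline for a coffee shop", fake_provider))
```

Keeping the provider call behind a single function like this is what makes the "wrapper" easy to point at a different model later.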

This pattern is perfect for simple products like chatbots or AI writing assistants. When people talk about building “AI wrappers”, this is what they mean.

There are really only a few parts of this pattern that require optimization:

System prompt

Examples / fine-tuning

Evaluation and monitoring

A system prompt is the default prompt that the application sends to the AI model with every request. It typically contains instructions that have been carefully crafted to get the best possible output from the model.

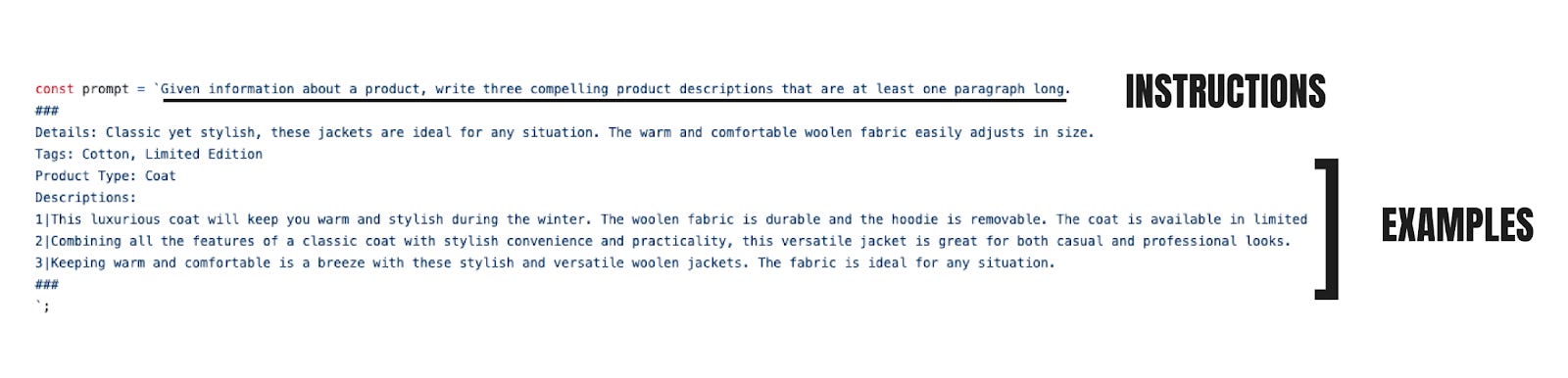

For example, let’s say you wanted to build an ecommerce product description generator. Your system prompt might look something like this:

The first part of the prompt includes instructions. This can include techniques like setting the role (“You are an expert copywriter”) and setting constraints (“Return one sentence per product”).

Next come the examples. Including a single example in the prompt is called one-shot prompting; including several is called few-shot prompting. You can add as many examples as you like, as long as the text fits in the context window: every AI model has a fixed limit on how many tokens (small chunks of text, often parts of words) it can accept in one request. When building AI systems, deciding what context to provide is one of the most important and challenging steps.
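Here is a minimal sketch of how the instructions and one or more examples might be assembled into a single system prompt, adding examples only while they fit a token budget. The product examples are made up, and the token count uses a rough four-characters-per-token heuristic as an assumption; a real system would count with the model's own tokenizer.

```python
# Assumption: ~4 characters per token, a rough rule of thumb for English.
# A real system would count tokens with the model's own tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate token count, rounded up."""
    return (len(text) + CHARS_PER_TOKEN - 1) // CHARS_PER_TOKEN


# Instructions: set the role, then the constraints.
INSTRUCTIONS = (
    "You are an expert copywriter for an ecommerce store.\n"
    "Write a description for each product you are given.\n"
    "Return one sentence per product."
)

# Hypothetical (product, description) pairs to use as examples.
EXAMPLES = [
    ("Ceramic mug, 12 oz, matte black",
     "A matte black 12 oz mug made for slow mornings and strong coffee."),
    ("Canvas tote bag, natural",
     "A sturdy canvas tote that carries groceries, books, and everything in between."),
    ("Bamboo cutting board, large",
     "A large bamboo board that is gentle on knives and easy to clean."),
]


def build_system_prompt(instructions: str,
                        examples: list[tuple[str, str]],
                        token_budget: int) -> str:
    """Instructions first, then as many examples as fit in the token budget."""
    parts = [instructions]
    used = estimate_tokens(instructions)
    for product, description in examples:
        example = f"\n\nProduct: {product}\nDescription: {description}"
        cost = estimate_tokens(example)
        if used + cost > token_budget:
            break  # stop before the prompt outgrows its share of the context window
        parts.append(example)
        used += cost
    return "".join(parts)


one_shot = build_system_prompt(INSTRUCTIONS, EXAMPLES[:1], token_budget=1000)
few_shot = build_system_prompt(INSTRUCTIONS, EXAMPLES, token_budget=1000)
print(few_shot)
```

Capping the examples at a fixed budget leaves room in the context window for the user's actual request; deciding which examples go first is its own design choice.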

Here’s the beginning of Bolt.diy’s system prompt – you can check out the full prompt here:

You are Bolt, an expert AI assistant and exceptional senior software developer with vast knowledge across multiple programming languages, frameworks, and best practices.

<system_constraints>

You are operating in an environment called WebContainer, an in-browser Node.js runtime that emulates a Linux system to some degree. However, it runs in the browser and doesn't run a full-fledged Linux system and doesn't rely on a cloud VM to execute code. All code is executed in the browser. It does come with a shell that emulates zsh. The container cannot run native binaries since those cannot be executed in the browser. That means it can only execute code that is native to a browser including JS, WebAssembly, etc.

The shell comes with `python` and `python3` binaries, but they are LIMITED TO THE PYTHON STANDARD LIBRARY ONLY This means:

- There is NO `pip` support! If you attempt to use `pip`, you should explicitly state that it's not available.

- CRITICAL: Third-party libraries cannot be installed or imported.

- Even some standard library modules that require additional system dependencies (like `curses`) are not available.

- Only modules from the core Python standard library can be used.

Additionally, there is no `g++` or any C/C++ compiler available. WebContainer CANNOT run native binaries or compile C/C++ code!

Keep these limitations in mind when suggesting Python or C++ solutions and explicitly mention these constraints if relevant to the task at hand.

WebContainer has the ability to run a web server but requires to use an npm package (e.g., Vite, servor, serve, http-server) or use the Node.js APIs to implement a web server.

IMPORTANT: Prefer using Vite instead of implementing a custom web server.

IMPORTANT: Git is NOT available.

IMPORTANT: WebContainer CANNOT execute diff or patch editing so always write your code in full no partial/diff update

IMPORTANT: Prefer writing Node.js scripts instead of shell scripts. The environment doesn't fully support shell scripts, so use Node.js for scripting tasks whenever possible!

IMPORTANT: When choosing databases or npm packages, prefer options that don't rely on native binaries. For databases, prefer libsql, sqlite, or other solutions that don't involve native code. WebContainer CANNOT execute arbitrary native binaries.

CRITICAL: You must never use the "bundled" type when creating artifacts, This is non-negotiable and used internally only.

CRITICAL: You MUST always follow the <boltArtifact> format.

Available shell commands:

File Operations:

- cat: Display file contents

- cp: Copy files/directories

- ls: List directory contents

- mkdir: Create directory

- mv: Move/rename files

- rm: Remove files

- rmdir: Remove empty directories

- touch: Create empty file/update timestamp

System Information:

- hostname: Show system name

- ps: Display running processes

- pwd: Print working directory

- uptime: Show system uptime

- env: Environment variables

Development Tools:

- node: Execute Node.js code

- python3: Run Python scripts

- code: VSCode operations

- jq: Process JSON

Other Utilities:

- curl, head, sort, tail, clear, which, export, chmod, scho, hostname, kill, ln, xxd, alias, false, getconf, true, loadenv, wasm, xdg-open, command, exit, source

</system_constraints>

In addition to a system prompt, production-ready AI systems need logging.

Logging allows you to view the results of an AI request in your production environment. Here’s a quick example of what logging looks like:

In AI systems, this kind of logging is usually called tracing. The example above shows a sequence of steps performed by the AI system: this trace includes steps for generating text and for calling a tool called DocumentSearch. Tracing is a critical tool for product teams monitoring their production AI systems.
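Production tracing tools each have their own APIs, so rather than guess at one, here is a hand-rolled sketch of the core idea: every step runs inside a span that records its name, inputs, output, and duration. The `Trace` and `Span` classes, the step names, and the recorded values are all hypothetical placeholders chosen to mirror the DocumentSearch and text-generation steps described above.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Any, Iterator


@dataclass
class Span:
    """One recorded step of the AI system."""
    name: str
    inputs: dict[str, Any]
    output: Any = None
    duration_ms: float = 0.0


@dataclass
class Trace:
    """An ordered list of spans for a single request."""
    spans: list[Span] = field(default_factory=list)

    @contextmanager
    def span(self, name: str, **inputs: Any) -> Iterator[Span]:
        """Time one step and append it to the trace, even if the step fails."""
        record = Span(name=name, inputs=inputs)
        start = time.perf_counter()
        try:
            yield record
        finally:
            record.duration_ms = (time.perf_counter() - start) * 1000
            self.spans.append(record)


trace = Trace()

# Hypothetical steps; real code would call the search tool and the model here.
with trace.span("DocumentSearch", query="refund policy") as step:
    step.output = ["refunds.md"]  # placeholder search result
with trace.span("generate_text", prompt="Summarize refunds.md") as step:
    step.output = "Placeholder model output"  # placeholder model output

for step in trace.spans:
    print(f"{step.name}: {step.duration_ms:.2f} ms, inputs={step.inputs}, output={step.output!r}")
```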

Now that we’ve reviewed the basics, let's talk about agents.

Single Agent

The differentiating factor between simple integrations and agents is tools. A simple integration only lets the AI system return generated content; an agent can also take actions through a set of predefined tools. Tools are typically defined as a set of API calls the agent can make.
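In code, the difference shows up as a loop: the model either requests a tool call or returns a final answer, and the application runs any requested tool and feeds the result back to the model. In this sketch the model is a scripted stand-in so it runs offline, and the `get_open_rates` tool, its data, and the message format are all hypothetical; real provider APIs each define their own tool-calling formats.

```python
from typing import Any, Callable


def get_open_rates(broadcast_ids: list[str]) -> dict[str, float]:
    """Hypothetical tool; a real one would call an email platform's API."""
    fake_data = {"b1": 0.42, "b2": 0.37}  # placeholder numbers
    return {b: fake_data.get(b, 0.0) for b in broadcast_ids}


# Tool registry: name -> function the agent is allowed to call.
TOOLS: dict[str, Callable[..., Any]] = {"get_open_rates": get_open_rates}


def scripted_model(history: list[dict[str, Any]]) -> dict[str, Any]:
    """Stand-in for an LLM: first requests a tool call, then answers."""
    if not any(m["role"] == "tool" for m in history):
        return {"type": "tool_call", "name": "get_open_rates",
                "arguments": {"broadcast_ids": ["b1", "b2"]}}
    rates = history[-1]["content"]
    best = max(rates, key=rates.get)
    return {"type": "final", "content": f"Broadcast {best} had the highest open rate."}


def run_agent(task: str,
              model: Callable[[list[dict[str, Any]]], dict[str, Any]],
              tools: dict[str, Callable[..., Any]],
              max_steps: int = 5) -> str:
    history: list[dict[str, Any]] = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        action = model(history)
        if action["type"] == "final":
            return action["content"]
        # The model chose an action: run the tool and feed the result back.
        result = tools[action["name"]](**action["arguments"])
        history.append({"role": "tool", "name": action["name"], "content": result})
    raise RuntimeError("Agent did not finish within max_steps")


print(run_agent("Which broadcast had the best open rate?", scripted_model, TOOLS))
```

The `max_steps` cap is a common safeguard: without it, a model that keeps requesting tools would loop forever.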

For example, I can connect various tools to Claude Desktop through MCP (Model Context Protocol), an open protocol for connecting AI applications to external tools and data sources. When I ask Claude to analyze my email broadcast open rates, it can pull the relevant data from ConvertKit, create recommendations, and visualize the data for me.
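Under the hood, MCP messages are JSON-RPC 2.0: a server advertises its tools (each with a name, a description, and a JSON Schema for its inputs), and the client invokes one with a `tools/call` request. Below is a minimal sketch of those two payloads built by hand. The `get_broadcast_stats` tool is hypothetical, not ConvertKit's actual integration, and in practice the official MCP SDKs build and send these messages for you.

```python
import json

# A tool definition as an MCP server might advertise it in its
# "tools/list" response. The tool itself is hypothetical.
tool_definition = {
    "name": "get_broadcast_stats",
    "description": "Return open and click rates for recent email broadcasts.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "limit": {"type": "integer", "description": "How many recent broadcasts to include"},
        },
        "required": ["limit"],
    },
}


def tools_call_request(request_id: int, name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 "tools/call" request, as an MCP client sends it."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


print(tools_call_request(1, tool_definition["name"], {"limit": 10}))
```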