Designing a Scalable Social Media Scheduling App

A case study in technical tradeoffs

Hey, I’m Colin! I help PMs and business leaders improve their technical skills through real-world case studies. For more, check out my Live cohort course and subscribe on Substack.

Today, we’ll design the social media scheduling app Buffer from the ground up, then explore how PMs can better manage tradeoffs by improving their system design skills. Let’s dive in!

Understanding Our Core Features

Before we dive into architecture, let's document Buffer’s core features:

Create and store social media posts

Generate AI-powered post suggestions

Post to multiple social platforms at scheduled times

We'll build these features one at a time, seeing how each new requirement shapes our architecture.

Starting Simple: Creating and Storing Posts

Let's begin with the most basic feature - allowing users to create and save posts for later. At its simplest, we need a client, server, and database:

Our client sends a POST request to create new posts:

POST /posts

{
  "content": "Check out our new feature!",
  "scheduledTime": "2024-02-15T10:00:00Z"
}

The server stores this in our database with additional metadata:

{
  "id": "post_123",
  "content": "Check out our new feature!",
  "scheduledTime": "2024-02-15T10:00:00Z",
  "status": "scheduled",
  "createdAt": "2024-02-14T15:30:00Z"
}

Pretty easy so far!
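To make the server's role concrete, here is a minimal Python sketch of how a handler might turn the client's request into the stored record. The function name `create_post`, the random ID format, and the validation rules are assumptions for illustration; the article does not specify how Buffer generates IDs or validates input.

```python
import uuid
from datetime import datetime, timezone
from typing import Optional

TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def create_post(content: str, scheduled_time: str, now: Optional[datetime] = None) -> dict:
    """Build the record a server could store for a new post (hypothetical helper)."""
    if not content.strip():
        raise ValueError("content must not be empty")
    # Accept a trailing "Z" by converting it to an explicit UTC offset before parsing.
    scheduled = datetime.fromisoformat(scheduled_time.replace("Z", "+00:00"))
    if scheduled.tzinfo is None:
        raise ValueError("scheduledTime must include a timezone")
    now = now or datetime.now(timezone.utc)
    return {
        "id": f"post_{uuid.uuid4().hex[:8]}",  # assumed ID scheme
        "content": content,
        "scheduledTime": scheduled.astimezone(timezone.utc).strftime(TIME_FORMAT),
        "status": "scheduled",
        "createdAt": now.astimezone(timezone.utc).strftime(TIME_FORMAT),
    }


record = create_post("Check out our new feature!", "2024-02-15T10:00:00Z")
print(record["status"], record["scheduledTime"])
```

In a real service this record would be written to the database rather than returned, but the shape of the data is the same.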

Adding AI Content Generation

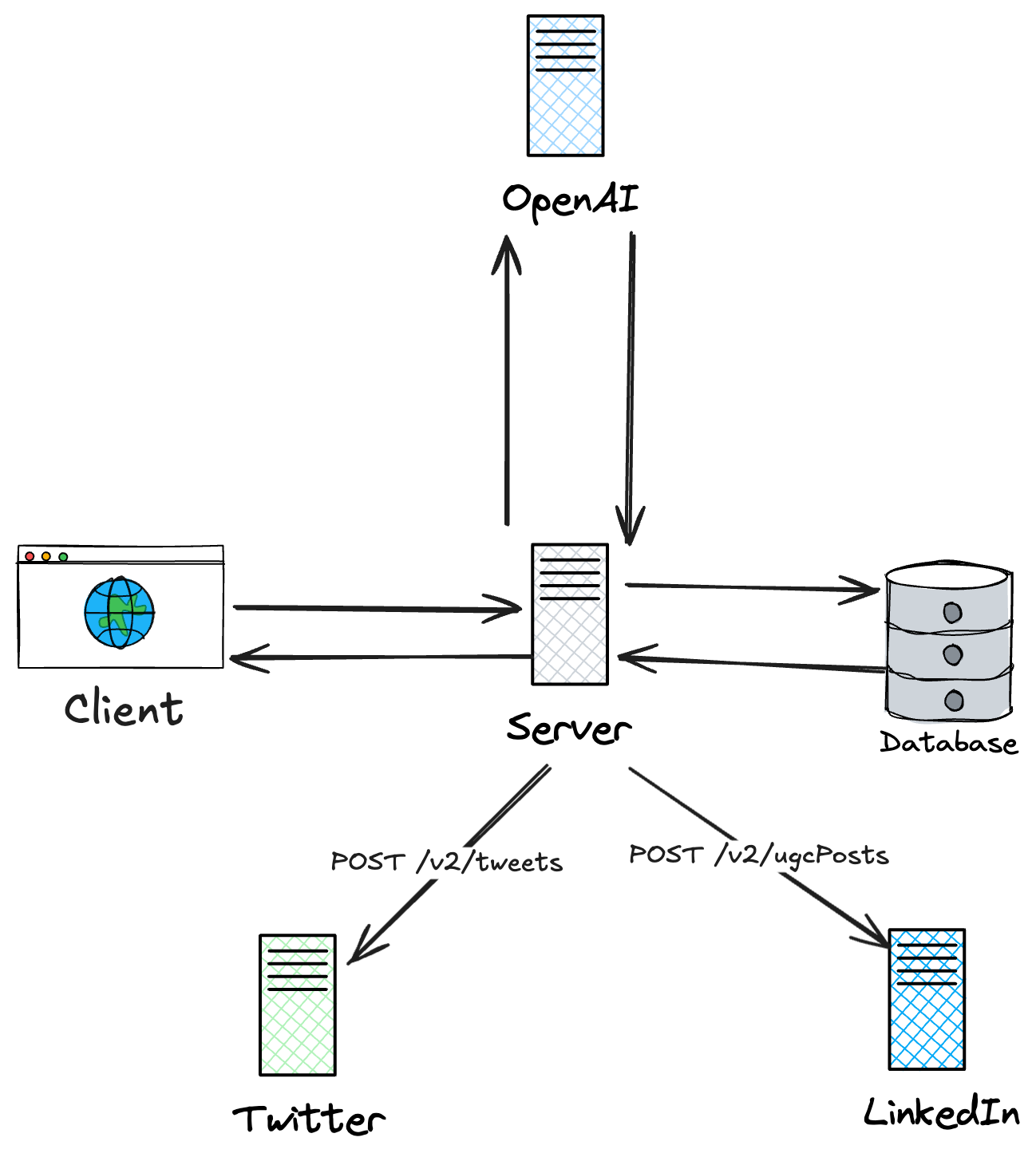

Next, let's add AI-powered suggestions. This introduces our first external integration - OpenAI:

Our client makes a request to our server, asking for AI-generated content:

POST /posts/generate

{
  "prompt": "Write a tweet about our new mobile app launch"
}

Then, our server forwards this to OpenAI with additional context:

{
  "model": "gpt-4",
  "messages": [
    {
      "role": "system",
      "content": "You are a social media expert. Write engaging, platform-appropriate content."
    },
    {
      "role": "user",
      "content": "Write a tweet about our new mobile app launch"
    }
  ]
}

Once this request completes, our server returns the AI-generated content to the client. We can then use the earlier POST /posts workflow to save the post to our database, including any edits the user made.
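The "additional context" step can be sketched as a small Python function that wraps the user's prompt in the message structure shown above. The helper name `build_generation_request` and the default model are assumptions for illustration; the sketch only builds the request body and does not call OpenAI.

```python
import json

SYSTEM_PROMPT = (
    "You are a social media expert. Write engaging, platform-appropriate content."
)


def build_generation_request(prompt: str, model: str = "gpt-4") -> dict:
    """Wrap a user prompt with the system context before forwarding it (hypothetical helper)."""
    prompt = prompt.strip()
    if not prompt:
        raise ValueError("prompt must not be empty")
    return {
        "model": model,
        "messages": [
            # The system message is the context our server adds on the user's behalf.
            {"role": "system", "content": SYSTEM_PROMPT},
            # The user message carries the client's prompt unchanged.
            {"role": "user", "content": prompt},
        ],
    }


body = build_generation_request("Write a tweet about our new mobile app launch")
print(json.dumps(body, indent=2))
```

Keeping the system prompt on the server means the client never needs to know how we instruct the model, so we can change that context without shipping a new client.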

Supporting Multiple Social Platforms

Now for our core feature - posting to social media. We need to integrate with multiple platforms, like LinkedIn and Twitter:

Each platform has its own API requirements, but they fundamentally work the same way. We’ll need to send a POST request with the user’s content:

Twitter - POST /2/tweets

{
  "text": "Check out our new feature!"
}

LinkedIn - POST /v2/ugcPosts

{
  "author": "urn:li:person:123",
  "lifecycleState": "PUBLISHED",
  "specificContent": {
    "com.linkedin.ugc.ShareContent": {
      "shareCommentary": {
        "text": "Check out our new feature!"
      }
    }
  }
}

These integrations allow us to push content to the relevant platform at the right time. But how do we trigger a request at the scheduled time?
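Before turning to scheduling, here is a rough Python sketch of how one stored post could be translated into the two platform payloads shown above. The function name `build_platform_request` and the returned dictionary shape are assumptions for illustration. The bodies mirror the simplified examples in this article, so a real integration would also need authentication and may need fields not shown here.

```python
def build_platform_request(platform: str, text: str, author_urn: str = "") -> dict:
    """Translate post text into a platform-specific request description (hypothetical helper)."""
    if platform == "twitter":
        return {"method": "POST", "path": "/2/tweets", "body": {"text": text}}
    if platform == "linkedin":
        if not author_urn:
            raise ValueError("LinkedIn posts need an author URN")
        return {
            "method": "POST",
            "path": "/v2/ugcPosts",
            "body": {
                "author": author_urn,
                "lifecycleState": "PUBLISHED",
                "specificContent": {
                    "com.linkedin.ugc.ShareContent": {
                        "shareCommentary": {"text": text}
                    }
                },
            },
        }
    raise ValueError(f"unsupported platform: {platform}")


for name in ("twitter", "linkedin"):
    request = build_platform_request(name, "Check out our new feature!", "urn:li:person:123")
    print(name, request["path"])
```

Isolating each platform's format behind one function means adding a new platform later does not change how posts are stored or scheduled.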

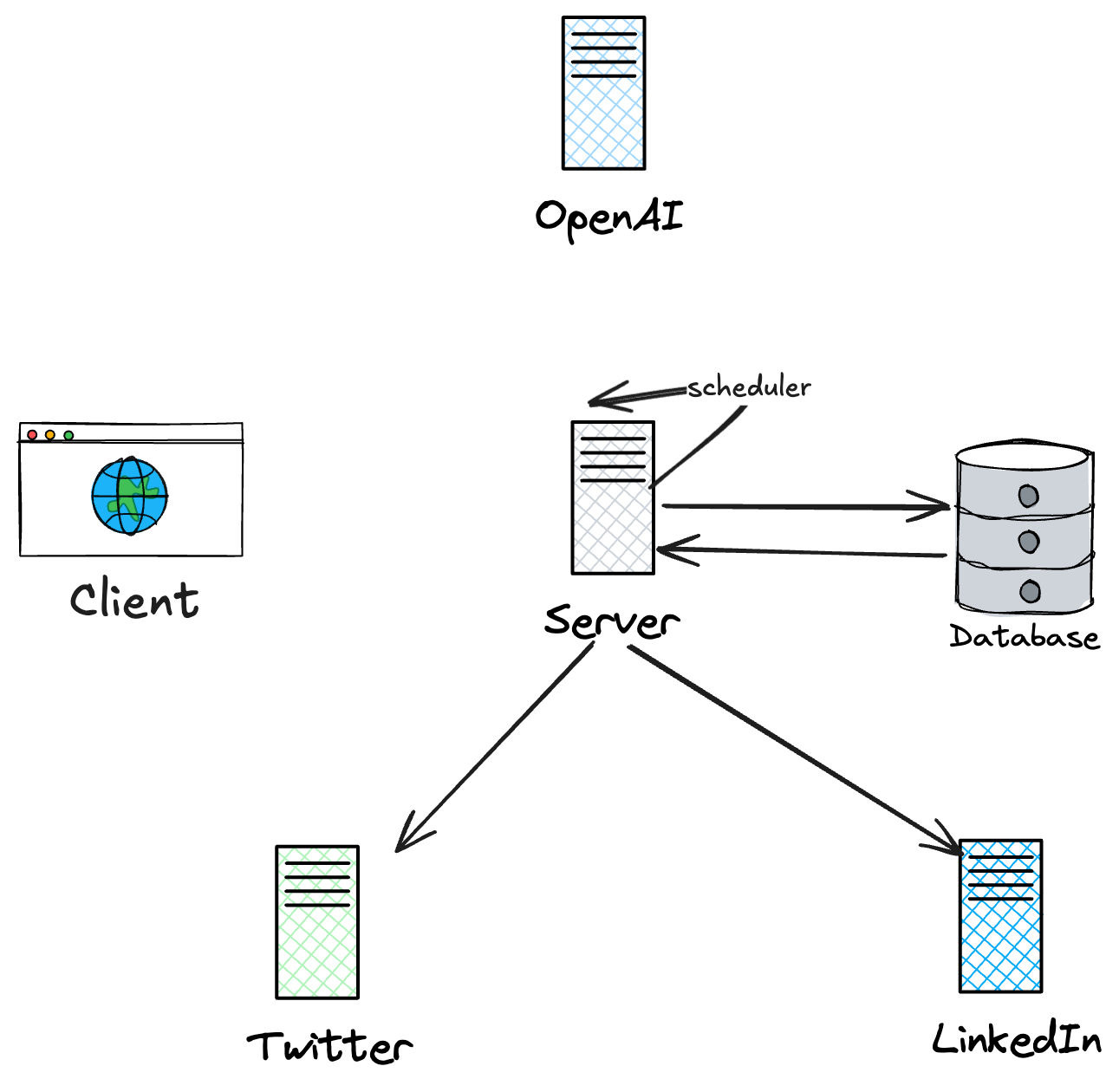

Handling Scheduled Posts

To handle posting at specific times, we need a scheduling system. There are a variety of options out there, but cron jobs are a common tool for this type of task.

We’ll run the job on our server every minute to check if any posts should be sent at that time. If we find one, we’ll trigger a request to the relevant social platform.

After we successfully send the post, we’ll update the post’s status in our database.
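The per-minute check described above might look roughly like the following Python sketch. The function `run_scheduler_tick`, the in-memory list of posts, the `send` callback, and the "sent" status value are all assumptions for illustration; in practice the posts would come from a database query and `send` would call the platform API.

```python
from datetime import datetime, timezone
from typing import Callable, Dict, List


def parse_time(value: str) -> datetime:
    """Parse an ISO 8601 timestamp, accepting a trailing "Z" for UTC."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def run_scheduler_tick(
    posts: List[Dict], now: datetime, send: Callable[[Dict], bool]
) -> List[str]:
    """Send every scheduled post that is due and mark it as sent (hypothetical helper)."""
    sent_ids = []
    for post in posts:
        if post["status"] != "scheduled":
            continue  # already handled on an earlier tick
        if parse_time(post["scheduledTime"]) > now:
            continue  # not due yet
        if send(post):
            post["status"] = "sent"  # assumed status value
            sent_ids.append(post["id"])
        # On failure the post stays "scheduled", so the next tick tries again.
    return sent_ids


posts = [
    {"id": "post_123", "scheduledTime": "2024-02-15T10:00:00Z", "status": "scheduled"},
]
now = datetime(2024, 2, 15, 10, 0, tzinfo=timezone.utc)
print(run_scheduler_tick(posts, now, send=lambda post: True))
```

The sketch selects posts whose scheduled time is at or before the current time, rather than exactly equal to it, so a post is not lost if one run of the job is delayed or skipped.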

Now Let's Talk About Scale

Our architecture now supports all core features, but what happens when we have thousands of concurrent users? This introduces new challenges with our system design and external integrations.

Let's address these challenges one by one.

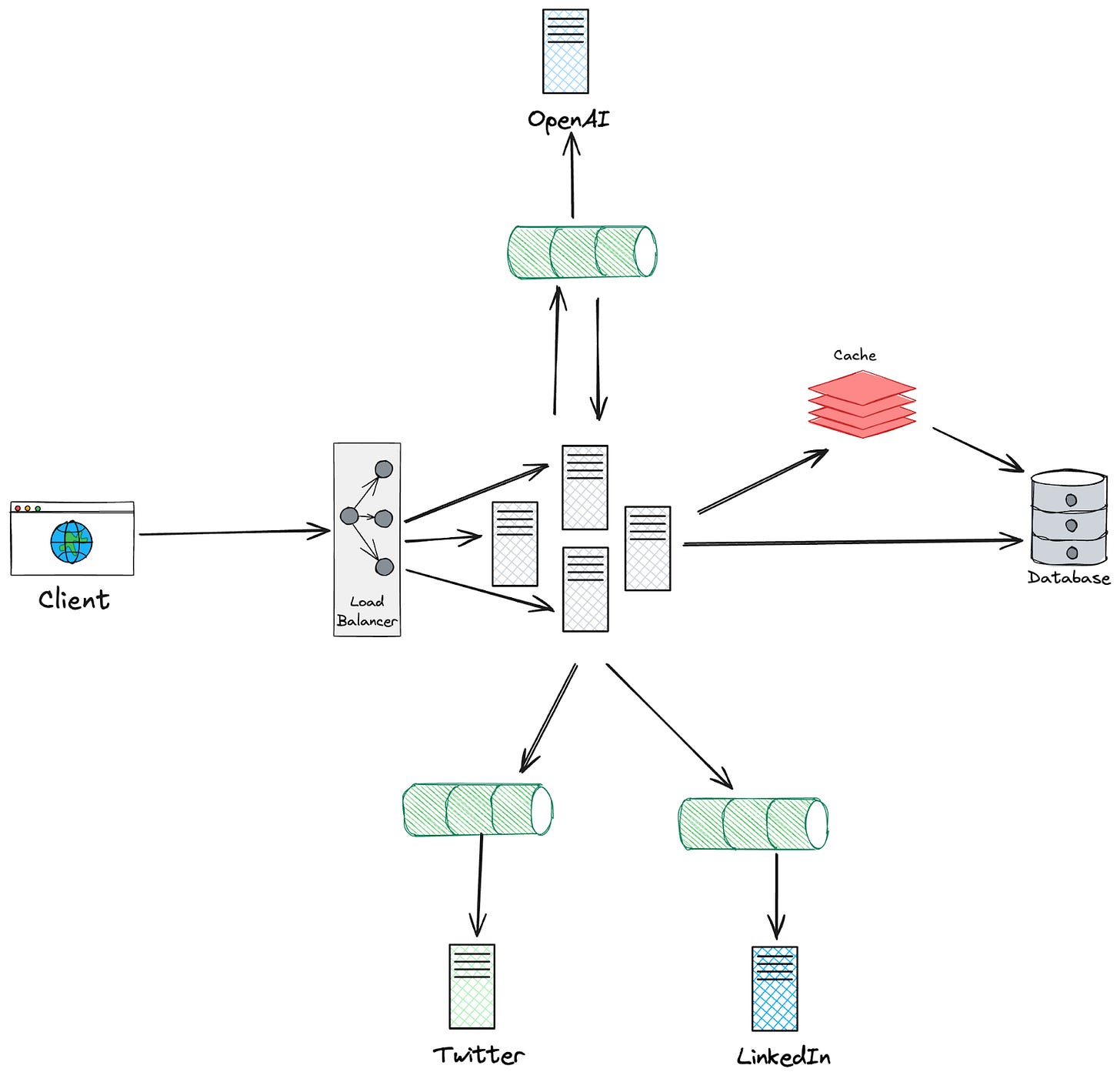

First, we'll add horizontal scaling and load balancing. Horizontal scaling adds more servers so we can process requests from all of our customers. Load balancing splits those requests among the servers.

Together, these let us scale up or down based on demand and maintain availability if a server fails.
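One simple way to split requests is round-robin, where each new request goes to the next server in the list. The Python sketch below is an illustration under that assumption; the class name `RoundRobinBalancer` and the server names are hypothetical, and production load balancers typically offer other strategies as well.

```python
import itertools
from typing import Iterable


class RoundRobinBalancer:
    """Hand out servers in rotation, skipping any marked as down (hypothetical helper)."""

    def __init__(self, servers: Iterable[str]) -> None:
        self._servers = list(servers)
        if not self._servers:
            raise ValueError("at least one server is required")
        self._healthy = set(self._servers)
        self._cycle = itertools.cycle(self._servers)

    def mark_down(self, server: str) -> None:
        self._healthy.discard(server)

    def mark_up(self, server: str) -> None:
        if server in self._servers:
            self._healthy.add(server)

    def next_server(self) -> str:
        # Look at each server at most once per call so we never loop forever.
        for _ in range(len(self._servers)):
            server = next(self._cycle)
            if server in self._healthy:
                return server
        raise RuntimeError("no healthy servers available")


balancer = RoundRobinBalancer(["server-a", "server-b", "server-c"])
print([balancer.next_server() for _ in range(4)])
```

Skipping servers marked as down is what lets the system stay available when one server fails: traffic simply flows to the remaining ones.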

With more servers making database requests, we may need to add a database cache. A cache temporarily keeps a copy of frequently read data, usually in memory. When a request can be served from the cache, it returns much faster than a database query, which speeds up the user experience and reduces load on the database.
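As a rough illustration of the idea, the Python sketch below checks the cache first and only falls back to the database on a miss or after an entry expires. The class name `ReadThroughCache`, the `load` callback standing in for a database query, and the 60-second expiry are assumptions; real systems often use a dedicated cache service instead of an in-process dictionary.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class ReadThroughCache:
    """Serve repeated reads from memory and load from the database on a miss."""

    def __init__(
        self,
        load: Callable[[Hashable], Any],
        ttl_seconds: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._load = load  # stand-in for a database query
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}

    def get(self, key: Hashable) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: no database call
        value = self._load(key)  # cache miss or expired entry
        self._entries[key] = (now + self._ttl, value)
        return value

    def invalidate(self, key: Hashable) -> None:
        """Drop an entry, for example after the post is edited."""
        self._entries.pop(key, None)


cache = ReadThroughCache(load=lambda post_id: {"id": post_id, "status": "scheduled"})
print(cache.get("post_123"))
```

The tradeoff is freshness: a cached value can be slightly out of date, which is why the sketch expires entries and lets the caller invalidate them after a write.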

Finally, we need queues to manage external API interaction. A queue holds requests until a worker is ready to process them. We use queues to decouple our systems and reduce the risk of failure, especially with external integrations.

Once requests arrive in the queue, we process them at a fixed rate to ensure we don’t exceed the rate limits placed by OpenAI, Twitter, or LinkedIn. This also allows us to easily retry requests if they fail.
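A minimal Python sketch of that worker loop is shown below. The function name `drain_queue`, the one-request-per-second rate, and the three-attempt limit are assumptions for illustration; actual limits depend on each platform, and a production system would likely use a managed queue service rather than an in-memory deque.

```python
import time
from collections import deque
from typing import Callable, Deque, List, Tuple


def drain_queue(
    queue: Deque[Tuple[str, int]],
    handler: Callable[[str], None],
    per_second: float = 1.0,
    max_attempts: int = 3,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Process queued requests at a fixed rate, retrying failures (hypothetical helper).

    Each queue item is a (request, attempts_so_far) pair. Returns the requests
    that still failed after max_attempts tries.
    """
    interval = 1.0 / per_second
    failed = []
    while queue:
        request, attempts = queue.popleft()
        try:
            handler(request)  # e.g. call the external API
        except Exception:
            if attempts + 1 < max_attempts:
                queue.append((request, attempts + 1))  # retry later
            else:
                failed.append(request)  # give up and report
        sleep(interval)  # pause so we stay under the rate limit
    return failed


pending = deque([("post_123", 0), ("post_124", 0)])
leftover = drain_queue(pending, handler=print, per_second=100.0)
print("failed:", leftover)
```

Because failed requests go back onto the queue instead of being dropped, a temporary outage at an external platform delays a post rather than losing it.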

Managing Technical Tradeoffs

At this point, we’ve designed a robust social media scheduling platform. As the PM running this product, you likely would have encountered many technical tradeoffs as you implemented new features and handled increasing user counts.

A fundamental tradeoff in system design is between building something simple that works today and something complex that will scale tomorrow. Going back to Buffer’s AI feature, you have two choices on implementation:

Basic:

Client -> Server -> OpenAI API

Scalable:

Client -> Load Balancer -> Multiple Servers -> Queue -> OpenAI API

The second approach is more "correct" from a technical perspective, but it could take weeks longer to build. This is what technical tradeoffs are all about.

Engineers will often have strong opinions about architectural decisions, and for good reason - they're the ones who have to maintain the system. You can help guide these decisions by:

Clearly communicating business constraints and priorities

Providing concrete scale requirements and growth projections

Being explicit about reliability requirements for different features

Helping evaluate the tradeoff between time-to-market and scalability

Supporting progressive scaling approaches when appropriate

The key is to frame technical decisions in terms of business impact. Instead of asking "Should we use queues?", ask "What's the business impact of occasional failed posts vs. delaying the launch by two weeks to implement queues?"

By understanding these tradeoffs and their business implications, you can have more productive conversations with your engineering team and make better decisions for your product.

Putting it all together

As we've explored Buffer's journey from a simple posting service to a sophisticated scheduling platform, the most important lesson isn't about queues, caches, or load balancers - it's about understanding that good system design comes from truly listening to your users' needs and thoughtfully evolving your architecture to meet them.

Every technical decision shapes the experience of your users and the daily work of your engineering team, so aim to build systems that solve real problems rather than theoretical ones.
