Build With Me: A Live Multimodal Teaching Assistant

Build your own AI assistant in 30 minutes or less

Hey, I’m Colin! I help PMs and business leaders improve their technical skills through real-world case studies. For more, check out my live courses, Technical Foundations and AI Prototyping.

You’ve probably seen the recent news about Google's newest model, Gemini 2.0.

Gemini comes with some pretty cool features out of the box, like built-in Google Search and real-time multimodal communication through its Multimodal Live API. This means you can talk to the model, share your screen, and have it search the internet while it answers.

I decided to enhance a demo created by Google to make an AI teaching assistant. My app takes in a transcript as context, then answers questions on APIs, system design, and more.

Check it out (unmute for the full effect):

In this post, I’ll walk you through how I built it, step by step. If you haven’t played around with AI models yet, find 30 minutes this weekend to give this a try.
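One way to build the teaching-assistant part is to put the transcript into the session's setup message. With the Multimodal Live API, the first thing the client sends over its connection is a setup message that configures the whole session, so the transcript can ride along as the system instruction next to the built-in Google Search tool. Below is a minimal sketch. It assumes the field names used by Google's starter project at the time of writing (`model`, `generationConfig`, `systemInstruction`, `tools`) and the experimental model name `models/gemini-2.0-flash-exp`. `buildTeachingSetup` and the 50,000-character cap are hypothetical and illustrative, not the exact code behind the demo.

```typescript
// ASSUMPTIONS: field names mirror the setup message in Google's Multimodal
// Live API web console starter at the time of writing; check the current
// API docs. `buildTeachingSetup` and MAX_TRANSCRIPT_CHARS are hypothetical.

const MAX_TRANSCRIPT_CHARS = 50000; // hypothetical cap to keep the prompt small

interface SetupMessage {
  setup: {
    model: string;
    generationConfig: { responseModalities: string[] };
    systemInstruction: { parts: Array<{ text: string }> };
    tools: Array<{ googleSearch: Record<string, never> }>;
  };
}

function buildTeachingSetup(model: string, transcript: string): SetupMessage {
  const text = [
    "You are a teaching assistant for a course on APIs, system design, and related topics.",
    "Answer questions using the transcript below as your primary context.",
    "If the transcript does not cover a question, say so, then answer from general knowledge or Google Search.",
    "",
    "--- TRANSCRIPT START ---",
    transcript.trim().slice(0, MAX_TRANSCRIPT_CHARS),
    "--- TRANSCRIPT END ---",
  ].join("\n");

  return {
    setup: {
      model, // e.g. "models/gemini-2.0-flash-exp" (assumed model name)
      generationConfig: { responseModalities: ["AUDIO"] }, // spoken replies
      systemInstruction: { parts: [{ text }] }, // the transcript travels here
      tools: [{ googleSearch: {} }], // built-in Google Search tool
    },
  };
}

// Usage: send this JSON as the first message once the connection opens.
const setupJson = JSON.stringify(
  buildTeachingSetup("models/gemini-2.0-flash-exp", "Today we covered REST APIs..."),
);
```

Putting the transcript in the system instruction means it applies to every turn of the session without being re-sent, which is why it belongs in setup rather than in each question.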

Key Technologies

Here’s how our AI app is going to work:

A client-side app that accepts audio, video, and text

A WebSocket connection that streams those inputs to Google and receives the model's outputs, both in real time

That’s it!
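Those two pieces can be sketched in a few functions: building the WebSocket URL, and packaging microphone audio for streaming. This is a minimal sketch, assuming the `v1alpha` endpoint path and the `realtimeInput`/`mediaChunks` message shape used by Google's starter project at the time of writing, and assuming input audio is 16 kHz, 16-bit PCM that was already captured at that rate. The function names are hypothetical.

```typescript
// ASSUMPTIONS: endpoint path and realtimeInput/mediaChunks shape follow the
// v1alpha Multimodal Live API used by Google's starter at the time of writing;
// input audio is 16 kHz, 16-bit little-endian PCM. Function names are hypothetical.

const LIVE_HOST = "generativelanguage.googleapis.com";
const LIVE_PATH =
  "/ws/google.ai.generativelanguage.v1alpha.GenerativeService.BidiGenerateContent";

// Builds the WebSocket URL; the API key is passed as a query parameter.
function buildLiveUrl(apiKey: string): string {
  return `wss://${LIVE_HOST}${LIVE_PATH}?key=${encodeURIComponent(apiKey)}`;
}

// Converts microphone samples in [-1, 1] to little-endian 16-bit PCM, base64-encoded.
function floatToPcm16Base64(samples: Float32Array): string {
  const bytes = new Uint8Array(samples.length * 2);
  const view = new DataView(bytes.buffer);
  for (let i = 0; i < samples.length; i++) {
    const s = Math.max(-1, Math.min(1, samples[i])); // clamp out-of-range samples
    view.setInt16(i * 2, Math.round(s * 0x7fff), true); // true = little-endian
  }
  let binary = "";
  for (let i = 0; i < bytes.length; i++) {
    binary += String.fromCharCode(bytes[i]);
  }
  return btoa(binary); // btoa is built into browsers and Node 16+
}

// Wraps one chunk of audio in the realtime-input envelope streamed to Google.
function buildAudioChunkMessage(samples: Float32Array): string {
  return JSON.stringify({
    realtimeInput: {
      mediaChunks: [{ mimeType: "audio/pcm;rate=16000", data: floatToPcm16Base64(samples) }],
    },
  });
}
```

In the browser you would open `new WebSocket(buildLiveUrl(apiKey))`, send the session's setup message when the socket's `open` event fires, and then call `ws.send(buildAudioChunkMessage(chunk))` for each chunk of microphone audio. Video frames can presumably be streamed the same way as base64 `image/jpeg` chunks.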

We’re going to use Bolt.new to get up and running quickly. Bolt is an AI-powered IDE that can write code for us with simple prompts.

Setting Up the Project

Getting started requires two main steps: setting up your development environment and getting your API key.

First, import the project into Bolt:

Go to bolt.new/github.com/google-gemini/multimodal-live-api-web-console to automatically import Google’s starter project into Bolt.

You'll need to manually create a package.json file and copy the contents from the GitHub repository. To do so:

Open the package.json file in the GitHub repo (github.com/google-gemini/multimodal-live-api-web-console)

Copy its contents

In Bolt, right-click on Files and select ‘Add file’

Name the file ‘package.json’ and paste in the contents
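Because this step involves copying JSON by hand, a truncated paste is an easy mistake. If you want to sanity-check the file before Bolt installs anything, here is a minimal sketch. It assumes the starter is a Create React App project, so it looks for a `start` script and a `react-scripts` dependency; `checkPackageJson` is a hypothetical helper, not part of Bolt or the starter.

```typescript
// ASSUMPTION: the starter is a Create React App project, so a valid
// package.json should have a "start" script and a "react-scripts" dependency.
// `checkPackageJson` is a hypothetical helper for illustration.
function checkPackageJson(text: string): string[] {
  let pkg: unknown;
  try {
    pkg = JSON.parse(text); // a truncated paste usually fails right here
  } catch (err) {
    return [`Not valid JSON: ${(err as Error).message}`];
  }
  if (typeof pkg !== "object" || pkg === null) {
    return ["Top level must be a JSON object"];
  }
  const p = pkg as {
    scripts?: Record<string, string>;
    dependencies?: Record<string, string>;
    devDependencies?: Record<string, string>;
  };
  const problems: string[] = [];
  if (!p.scripts?.start) problems.push('Missing "scripts.start"');
  const deps = { ...p.dependencies, ...p.devDependencies }; // spreading undefined is a no-op
  if (!("react-scripts" in deps)) problems.push('Missing "react-scripts" dependency');
  return problems; // an empty array means the basic checks passed
}
```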

Your files should look like this once complete:

Then ask Bolt to install the dependencies and run the app.

Then, get your API key from Google’s AI Studio:

Visit AI Studio (https://aistudio.google.com/apikey)

Create a new API key if you don't have one

Create a .env file in your project (you can ask Bolt to do this)

Add your API key: REACT_APP_GEMINI_API_KEY=your_key_here

Here’s how this should look:

(Don’t forget to delete your API key after you wrap up! It’s better not to leave it active if you no longer need it, and you can always create a new one.)
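It helps to know how the key reaches the app, because that also explains why deleting it afterwards matters. A minimal sketch, assuming the starter is a Create React App project: CRA exposes only variables prefixed with `REACT_APP_` to browser code and embeds them into the JavaScript bundle at build time, so anyone who can load the page can read the key. `readApiKey` is a hypothetical helper that fails fast with a clear message instead of opening a connection with a missing or placeholder key.

```typescript
// ASSUMPTIONS: Create React App inlines REACT_APP_-prefixed variables at build
// time. `readApiKey` is a hypothetical helper; in the app you would call
// readApiKey(process.env.REACT_APP_GEMINI_API_KEY).
function readApiKey(value: string | undefined): string {
  const key = value?.trim();
  // Catch the two most common setup mistakes: a missing variable, or the
  // placeholder copied verbatim from the instructions.
  if (!key || key === "your_key_here") {
    throw new Error(
      "REACT_APP_GEMINI_API_KEY is not set. Add it to .env and restart the dev server.",
    );
  }
  return key;
}
```

CRA reads `.env` only when the dev server starts, so restart the app after editing the file.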

You might see some initial npm errors about a missing package.json; that's expected until you create the file manually. Once you've added your API key, you'll be ready to start development.

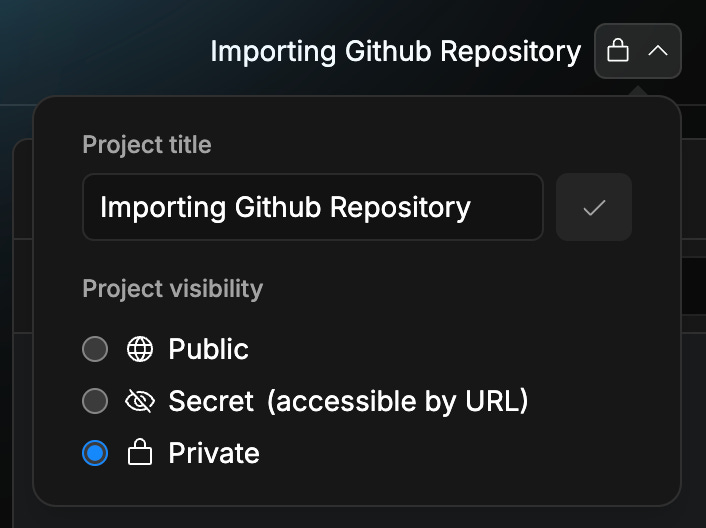

The final step is to mark your project as private at the top of the screen, so your .env file and API key aren't publicly visible.

From here you should have a basic implementation set up! Give it a try by pressing play and asking the AI a question.
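Besides speaking, you can send a typed question over the same connection. Here is a minimal sketch, assuming the `clientContent` and `serverContent.modelTurn` message shapes used by Google's starter project at the time of writing; `buildTextTurn` and `extractText` are hypothetical helpers. The server may deliver frames as binary (Blob) data, in which case you would call `await event.data.text()` before parsing.

```typescript
// ASSUMPTIONS: clientContent and serverContent.modelTurn shapes follow the
// v1alpha Multimodal Live API messages used by Google's starter at the time
// of writing. `buildTextTurn` and `extractText` are hypothetical helpers.

interface Part {
  text?: string;
  inlineData?: { mimeType: string; data: string }; // e.g. base64 audio
}

// Builds a complete user turn containing one typed question.
function buildTextTurn(question: string): string {
  return JSON.stringify({
    clientContent: {
      turns: [{ role: "user", parts: [{ text: question }] }],
      turnComplete: true, // signals that the user is done and the model may reply
    },
  });
}

// Collects the text parts of one server message; audio (inlineData) parts are skipped.
function extractText(raw: string): string {
  const msg = JSON.parse(raw) as {
    serverContent?: { modelTurn?: { parts?: Part[] } };
  };
  const parts = msg.serverContent?.modelTurn?.parts ?? [];
  return parts.map((p) => p.text ?? "").join("");
}
```

With audio responses enabled, most replies arrive as audio parts, so `extractText` may return an empty string; it is mainly useful if you switch the response modality to text.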